The previous version of the Guestbook stores the model data in a sqlite3 database. This version provides a new modularized version of the model adapter to support querying a PostgreSQL server. To view the code, change into its directory within the repository.

cd guestbook-src/02_mvp_modules_sqlite3

The directory structure shown below shows only the files of interest for this lab. There is a .env.sample file which will help with configuring the environment variables for the database connection. In addition, in gbmodel directory there is a new postgresql implementation (model_sql_postgres.py), which has to be enabled in the initialization file for the package (__init__.py)

02_mvp_modules_sqlite3 ├── gbmodel │ ├── __init__.py │ └── model_sql_postgres.py └── .env.sample

Use AWS RDS to create a postgresql instance to which we will connect with Python.

Go to the AWS RDS Service Page

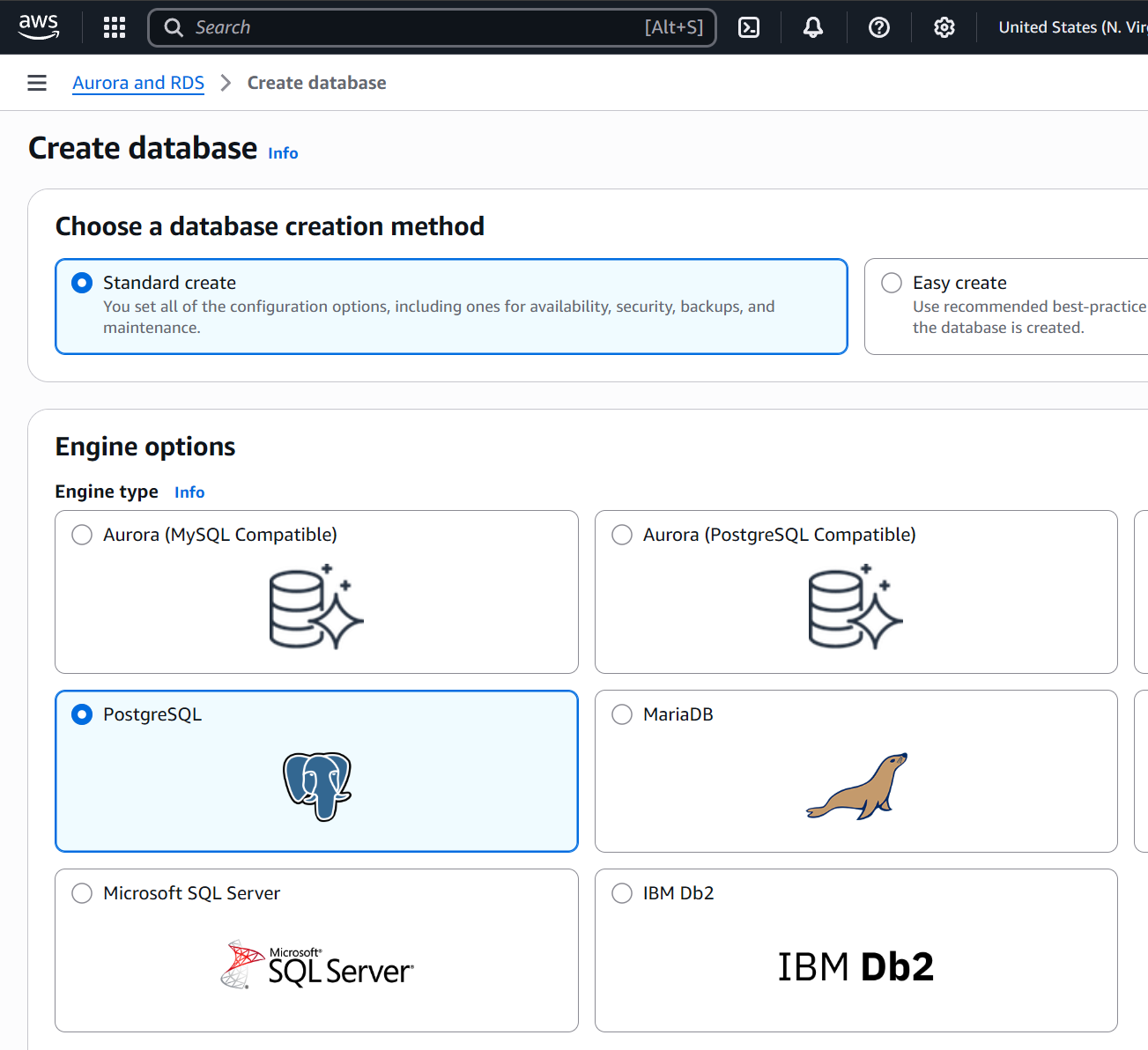

Search RDS and then under Aurora and RDS click databases and create database.

Create a PostgreSQL instance

Configure the following options for PostgreSQL:

Under Templates choose Sandbox

Under Settings, configure:

- DB instance identifier:

guestbook - Master username:

guestbook - Master Password:

guestbook(or custom but update.envlater)

Under DB instance class, configure

- Select Burstable classes (includes t classes)

- Select

db.t3.micro

Under Connectivity

- Select Public access: yes

- VPC security group (firewall), select create new

- Under New VPC security group name configure:

guestbook-db

Under Additional configuration, configure:

- Initial database name:

guestbook - Uncheck Enable automated backups

- Uncheck Enable encryption

This will turn off backups, which is not normally recommended, but will make the database deploy faster for this lab.

Click create database

You will now need to wait approximately 4 minutes for the database to be available.

Get server address

Wait until Info changes to Modifying or Available.

Scroll down to the Connectivity & security section and copy the Endpoint field.

It will look similar to: guestbook.xxxx.us-east-1.rds.amazonaws.com.

You now have all the information to connect from an external program like Python.

To explore the content of the database you can connect with a Database Tool, or directly use the cloud shell.

Open Cloud Shell

For this lab, we will use AWS Cloud Shell to first test the database connection. Click on the Cloud Shell icon from the AWS console.

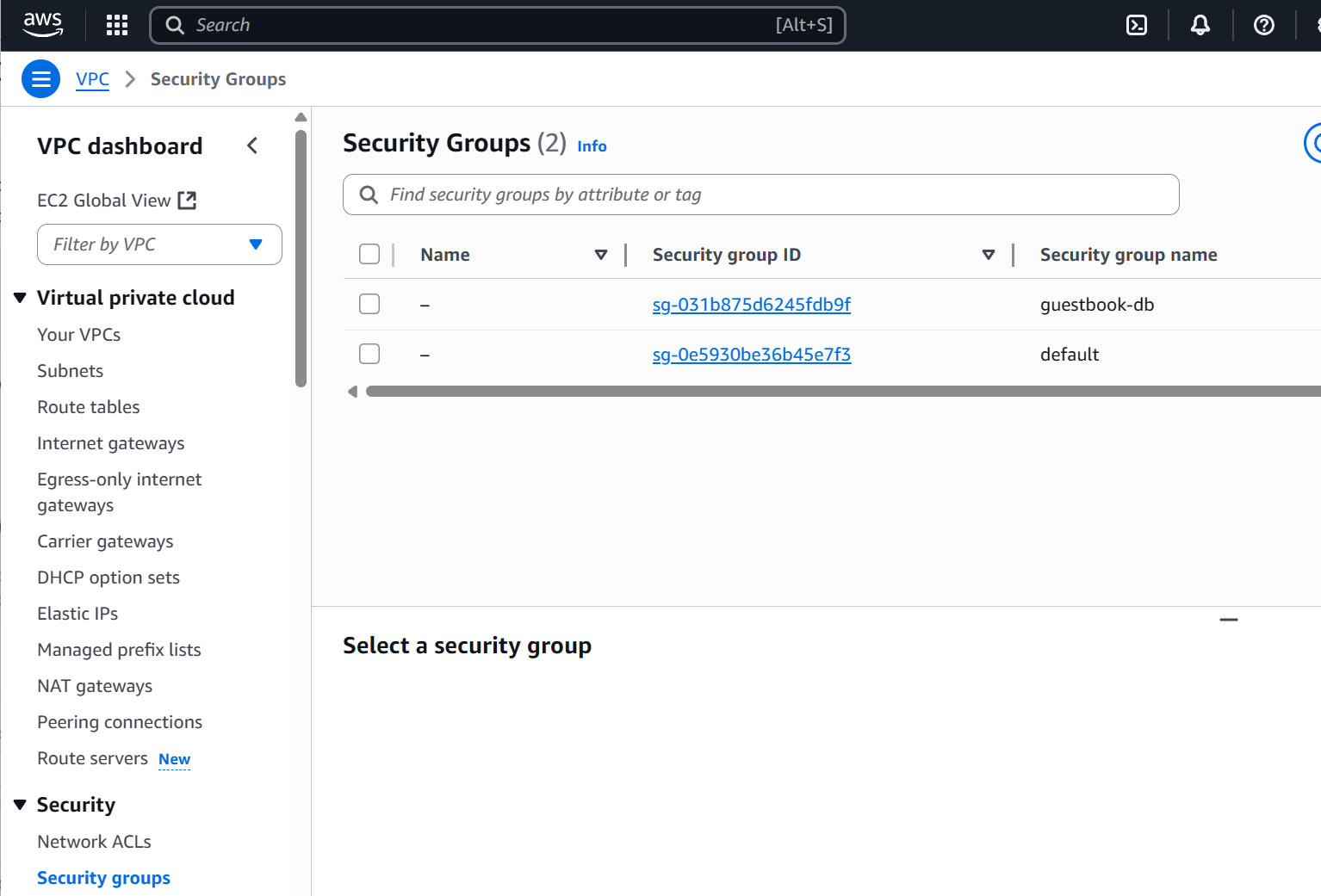

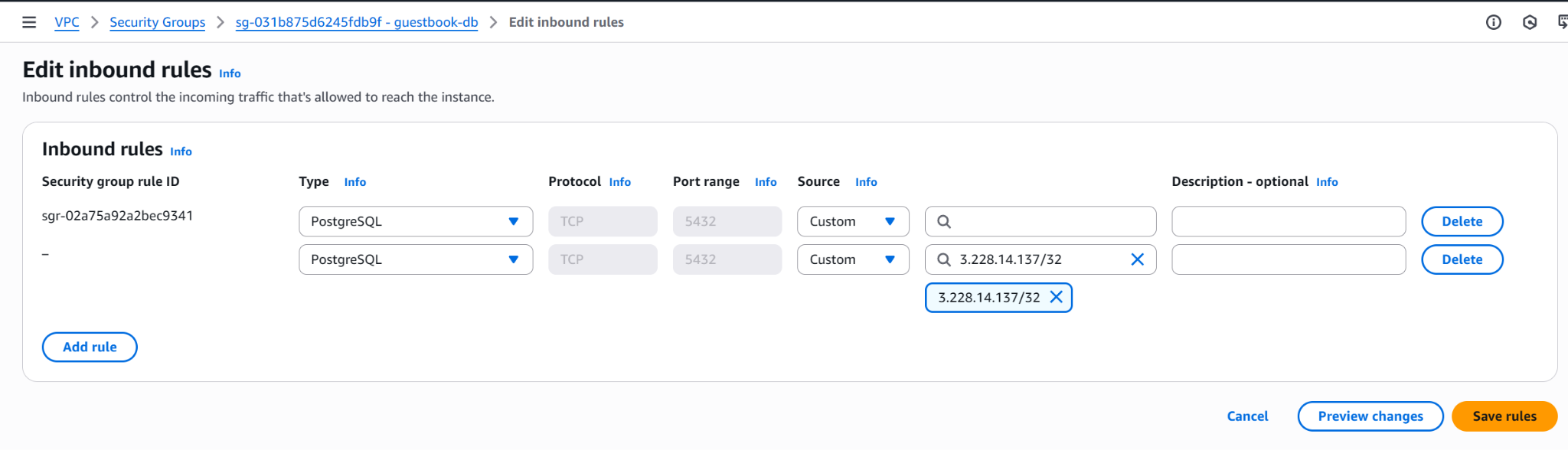

We need to configure the firewall / Security group to allow connection from the shell.

Perform the following command to obtain the IP address of the shell.

curl http://ipecho.net/plain ; echo

Configure VPC Security Group

Search for VPC and then go to the security group

Click on guestbook-db security group and then add a new inbound rule with the ip from your cloudshell.

Connect to PostgreSQL

psql -h [DB_HOST_ENDPOINT] -U guestbookIf connected successfully go to the next step. Keep your cloudshell, we will use it later.

We will setup the changes required to connect to a PostgreSQL server instead of a local sqlite3 database.

In__init__.py you can see that model_backend can be switched with an environment variable.

gbmodel/__init__.py

import os

from dotenv import load_dotenv

load_dotenv()

model_backend = os.getenv('GUESTBOOK_SERVICE', 'sqlite3')As the package constructor shows, we have implemented a new postgres backend. The code for the backend is in model_sql_postgres.py. The part that implements the DDL (Data Definition Language) is shown below. As the code shows, the abstract base class Model is first imported along with the psycopg package. There is no variable for a file name. Instead, it was replaced by the creation of the database connection string, which is assembled by loading information from environment variables. We are using the dotenv package, to simplify loading environment variables from a .env file. If you compare the code with the sqlite3 version you will notice that this version uses the Python with statement that allows to not explicitly call the close cursor and close connection methods, as the with block will do it for us. Additionally, we had to add a connection.rollback() in the except code, before being able to add a change in the current transactions. PostgreSQL supports transactions even for DDL.

gbmodel/model_sql_postgres.py

from datetime import datetime

from .model import Model

import psycopg

import os

from dotenv import load_dotenv

load_dotenv()

DB_CONNECTION = "host=%s port=%s dbname=%s user=%s password=%s" % (os.getenv('DB_HOST'), os.getenv('DB_PORT'), os.getenv('DB_NAME'), os.getenv('DB_USER'), os.getenv('DB_PASS'))

class ModelSqlPostgres(Model):

def __init__(self):

# Make sure our database exists

with psycopg.connect(DB_CONNECTION) as connection:

with connection.cursor() as cursor:

try:

cursor.execute("SELECT count(*) FROM entries")

except psycopg.errors.UndefinedTable:

connection.rollback()

cursor.execute("CREATE TABLE entries (id serial, name text, email text, signed_on timestamp, message text)")

connection.commit().env

DB_HOST=localhost

DB_PORT=5432 # Default PostgreSQL port

DB_NAME=guestbook

DB_USER=guestbook

DB_PASS=guestbook

GUESTBOOK_SERVICE=postgresThe rest of the code in model_sql_postgres.py implements the DML (Data Manipulation Language) part of the backend. As the code shows, the select() method simply returns all rows from the entries table as a list of lists. We had to add an id column, to have a primary key. The datetime object conversion is done automatically as long as the type of data is timestamp.

The insert() method takes the (name, email, message) strings, then generates a timestamp (datetime.now()) before inserting them all into the entries table.

gbmodel/model_sql_postgres.py

def select(self):

"""

Gets all rows from the database

Each row contains: name, email, signed_on, message, id

:return: List of lists containing all rows of database

"""

with psycopg.connect(DB_CONNECTION) as connection:

with connection.cursor() as cursor:

cursor.execute("SELECT name, email, signed_on, message, id FROM entries")

return cursor.fetchall()

def insert(self, name, email, message):

"""

Inserts entry into database

:param name: String

:param email: String

:param message: String

:return: True

:raises: Database errors on connection and insertion

"""

params = {'name':name, 'email':email, 'datetime':datetime.now(), 'message':message}

with psycopg.connect(DB_CONNECTION) as connection:

with connection.cursor() as cursor:

cursor.execute("INSERT INTO entries (name, email, signed_on, message) VALUES (%(name)s, %(email)s, %(datetime)s, %(message)s)", params)

connection.commit()

return True

On the machine you are running the web application on. Open your terminal, change into the repository that contains the code.

cd guestbook-src/02_mvp_modules_sqlite3

As before, create a Python 3 virtual environment and install the packages specified in requirements.txt (e.g. flask)

We have two additional requirements in order for postgresql to work with python

requirements.txt

psycopg[binary] # library to connect to postgresql

python-dotenv # top simplify loading of environment variables from .env filespython3 -m venv env source env/bin/activate pip install -r requirements.txt

Or on windows with PowerShell

python -m venv env .\env\Scripts\Activate.ps1 pip install -r requirements.txt

Then, start the server.

python app.py

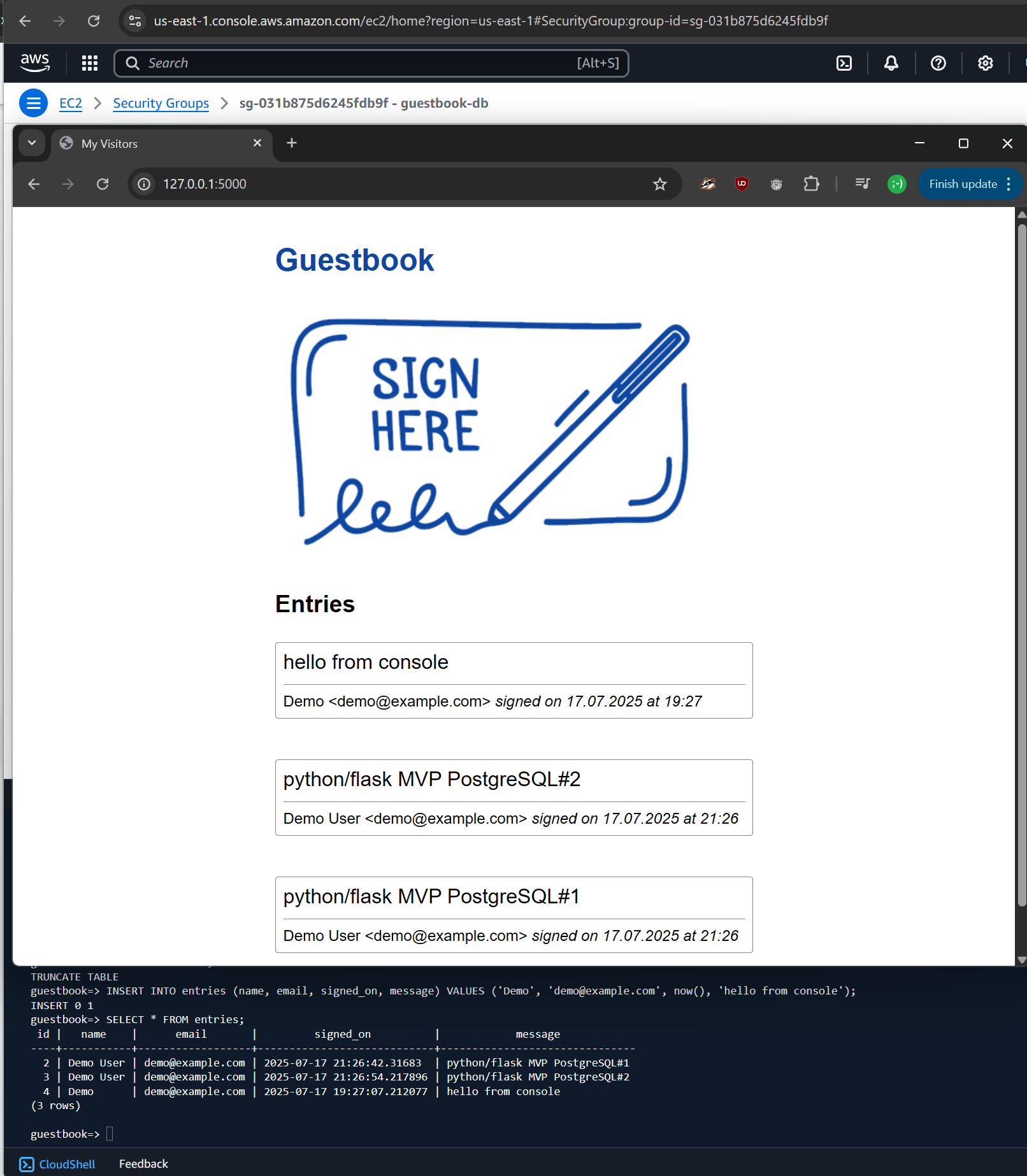

Visit the site as before and add an entry that includes your email address in it and the message "python/flask MVP PostgreSQL#1". Then, type "Ctrl+c" to stop the server.

Start the server again. The original message should still appear as it has been read from the database server. Add another entry using your email address and the message "python/flask MVP PostgreSQL#2".

As we successfully connected with the app we should now have a table and some data.

Explore Data

It is important to not forget the ; at the end of an SQL statement.

guestbook=> SELECT * FROM entries;Add new Data

Adapt to your information

guestbook=> INSERT INTO entries (name, email, signed_on, message) VALUES ('Demo', 'demo@example.com', now(), 'hello from console');Take a screenshot with the app and the console and your data.

Then, type "Ctrl+c" to stop the server again.

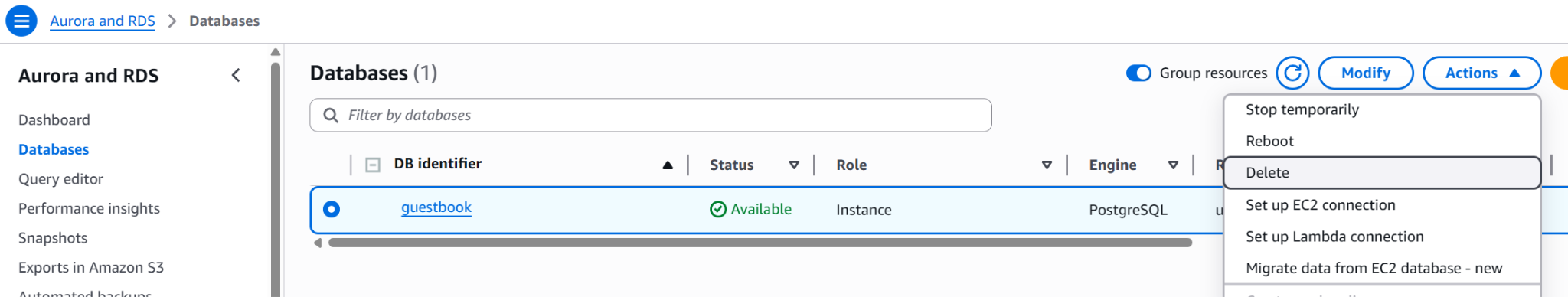

Delete and confirm

Under databases select your instance and click actions delete